I've recently been studying coroutines for Python web scraping, so I picked a novel website to practice on.

First, I fetch the URLs of all the chapters synchronously. Then I download and save each chapter's content asynchronously as a .txt file.
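When building chapter URLs from the index page, trimming the href with `str.lstrip` is fragile. `lstrip` removes any leading characters that belong to the given *set*, not a literal prefix, so a chapter id that begins with one of those digits gets cut short. `urllib.parse.urljoin`, or `str.removeprefix` (new in Python 3.9), avoids this. The short demonstration below uses hypothetical href values in the `/25802/<id>.html` shape that the posted code appears to expect:

```python
from urllib.parse import urljoin

index_url = "http://www.biqugse.com/25802/"
# Hypothetical hrefs, shaped like the chapter links the posted code handles
hrefs = ["/25802/123456.html", "/25802/2045.html"]

# lstrip("/258") drops leading '/', '2', '5', '8' characters (a set, not a prefix),
# then lstrip("02/") drops leading '0', '2', '/' characters
mangled = [index_url + h.lstrip("/258").lstrip("02/") for h in hrefs]
# -> the second URL ends in "/45.html": the "20" of the chapter id was stripped too

# urljoin resolves a root-relative href ("/25802/...") against the site origin
joined = [urljoin(index_url, h) for h in hrefs]

# removeprefix (Python 3.9+) strips only the literal prefix
trimmed = [index_url + h.removeprefix("/25802/") for h in hrefs]

for bad, good in zip(mangled, joined):
    print(f"{bad}  vs  {good}")
```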

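Usage:

Python version: Python 3.9

Libraries used: requests, BeautifulSoup (the `beautifulsoup4` package), asyncio (standard library), aiohttp and aiofiles. Install the third-party ones before running the code.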

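Problem:

The novel has 1,400+ chapters, but every run only downloads about 1,200 of them. My guess is a timeout error or an HTTP 400, but if it were a 400, I would not expect those pages to open in a browser, and they do. I don't know how to fix this and would appreciate any help.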

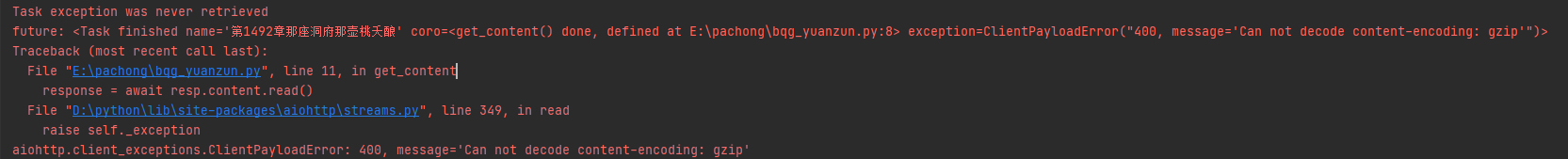
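One likely contributor, though not confirmed here, is that the code starts all ~1,400 downloads at the same moment, each in its own `ClientSession`. Every session has its own connection pool, so aiohttp's per-connector connection limit never applies across chapters, and a small site may throttle or drop connections under that load. A common remedy is to share one session and cap how many requests run at once with `asyncio.Semaphore`. The sketch below is plain asyncio so it runs without network access. `bounded_run` and `MAX_CONCURRENCY` are hypothetical names, and the limit of 10 is an arbitrary assumption to tune.

```python
import asyncio

# Hypothetical cap on simultaneous chapter downloads (arbitrary; tune for the site)
MAX_CONCURRENCY = 10


async def bounded_run(jobs, limit=MAX_CONCURRENCY):
    """Await every job, with at most `limit` of them running at once.

    `jobs` are zero-argument callables that return a coroutine, so a
    download does not even start until a semaphore slot is free.
    Results come back in the same order as `jobs`.
    """
    sem = asyncio.Semaphore(limit)

    async def guarded(job):
        async with sem:
            return await job()

    # return_exceptions=True: a failed job appears as an exception object
    # in the result list instead of being raised or lost
    return await asyncio.gather(*(guarded(job) for job in jobs), return_exceptions=True)


# Possible wiring with aiohttp (not executed here). It assumes get_content is
# changed to accept a shared `session`, which the original code does not do,
# and that `chapters` is a hypothetical list of (url, name) pairs:
#
#     async with aiohttp.ClientSession() as session:
#         jobs = [functools.partial(get_content, session, u, n) for u, n in chapters]
#         results = await bounded_run(jobs)
```

Entries in the returned list that are exception instances identify the chapters that need another attempt.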

Error output:

    Task exception was never retrieved
    future: <Task finished name='第1492章那座洞府那壶桃夭酿' coro=<get_content() done, defined at E:\pachong\bqg_yuanzun.py:8> exception=ClientPayloadError("400, message='Can not decode content-encoding: gzip'")>
    Traceback (most recent call last):
      File "E:\pachong\bqg_yuanzun.py", line 11, in get_content
        response = await resp.content.read()
      File "D:\python\lib\site-packages\aiohttp\streams.py", line 349, in read
        raise self._exception
    aiohttp.client_exceptions.ClientPayloadError: 400, message='Can not decode content-encoding: gzip'
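The `400` in this message is most likely not an HTTP status sent by the server. In aiohttp, `Can not decode content-encoding` is raised (with the code 400) when decompressing the response body fails, for example when the gzip stream arrives truncated. That would be consistent with the same pages opening fine in a browser. Under that assumption, retrying the failed chapter after a short pause is a reasonable first fix. Below is a minimal sketch of a retry helper with exponential backoff. `retry_async` and its defaults are hypothetical. With aiohttp you would pass `retry_on=(aiohttp.ClientError, asyncio.TimeoutError)`, since `ClientPayloadError` is a subclass of `aiohttp.ClientError`.

```python
import asyncio


async def retry_async(make_coro, retries=3, base_delay=1.0, retry_on=(Exception,)):
    """Await make_coro(), retrying when it raises one of `retry_on`.

    Sleeps base_delay, 2*base_delay, 4*base_delay, ... between attempts
    (exponential backoff) and re-raises the last error once `retries`
    attempts have failed. `retries` must be at least 1.
    """
    for attempt in range(retries):
        try:
            return await make_coro()
        except retry_on:
            if attempt == retries - 1:
                raise  # out of attempts: let the caller see the real error
            await asyncio.sleep(base_delay * 2 ** attempt)


# Hypothetical use inside the download coroutine (not executed here):
#
#     body = await retry_async(
#         lambda: fetch_body(session, xs_url),  # fetch_body is a hypothetical helper
#         retry_on=(aiohttp.ClientError, asyncio.TimeoutError),
#     )
```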

Code:

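    import asyncio
    import os
    from urllib.parse import urljoin

    import aiofiles
    import aiohttp
    import requests
    from bs4 import BeautifulSoup


    async def get_content(xs_url, name):
        # Note: a new ClientSession (and connection pool) is opened for every chapter
        async with aiohttp.ClientSession() as session:
            async with session.get(xs_url) as resp:
                response = await resp.content.read()
        soup = BeautifulSoup(response, "html.parser")
        content = soup.find("div", id="content")
        # Put each whitespace-separated chunk of the chapter text on its own line
        # ("\n" rather than "\r\n": text mode already translates newlines on Windows)
        data = "\n".join(content.text.split())
        async with aiofiles.open(f"novel/{name}.txt", mode="a", encoding="utf-8") as f:
            await f.write(data)
        print(f"Downloaded {name}")


    async def main(url):
        resp = requests.get(url)
        # [9:] skips the first 9 <dd> entries, as in the original code
        chapters = BeautifulSoup(resp.text, "html.parser").find("div", attrs={"id": "list"}).find_all("dd")[9:]
        tasks = []
        print("Creating async tasks")
        for dd in chapters:
            link = dd.find("a")
            # urljoin resolves the href against the index URL; str.lstrip("/258")
            # strips a *set of characters*, not a prefix, and can eat chapter-id digits
            xs_url = urljoin(url, link.get("href"))
            name = link.text
            tasks.append(asyncio.create_task(get_content(xs_url, name), name=name))
        await asyncio.wait(tasks)
        print("All async tasks finished")


    if __name__ == "__main__":
        print("Starting")
        os.makedirs("novel", exist_ok=True)  # output folder must exist before writing
        main_url = "http://www.biqugse.com/25802/"
        # asyncio.run replaces the older get_event_loop().run_until_complete pattern
        asyncio.run(main(main_url))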
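In the code above, `asyncio.wait(tasks)` waits for every task but never reads their results. Each failed chapter's exception is therefore only reported through asyncio's "Task exception was never retrieved" log line when the task object is garbage-collected, and nothing records which chapters are missing. Below is a minimal sketch of collecting the failures instead. `run_and_report` is a hypothetical helper, and chapter names are assumed to be unique. The failed names could then be fed into a second download pass.

```python
import asyncio


async def run_and_report(named_coros):
    """Run (name, coroutine) pairs as named tasks; return (succeeded, failed).

    `succeeded` is a list of task names; `failed` maps task name -> exception.
    Calling task.exception() marks the exception as retrieved, so asyncio no
    longer logs "Task exception was never retrieved" for those tasks.
    """
    tasks = [asyncio.create_task(coro, name=name) for name, coro in named_coros]
    if tasks:  # asyncio.wait() raises ValueError on an empty collection
        await asyncio.wait(tasks)
    succeeded, failed = [], {}
    for task in tasks:  # creation order (asyncio.wait's `done` set is unordered)
        exc = task.exception()
        if exc is None:
            succeeded.append(task.get_name())
        else:
            failed[task.get_name()] = exc
    return succeeded, failed
```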

Posted on 2022-8-15 22:35